Project: Sourcegraph

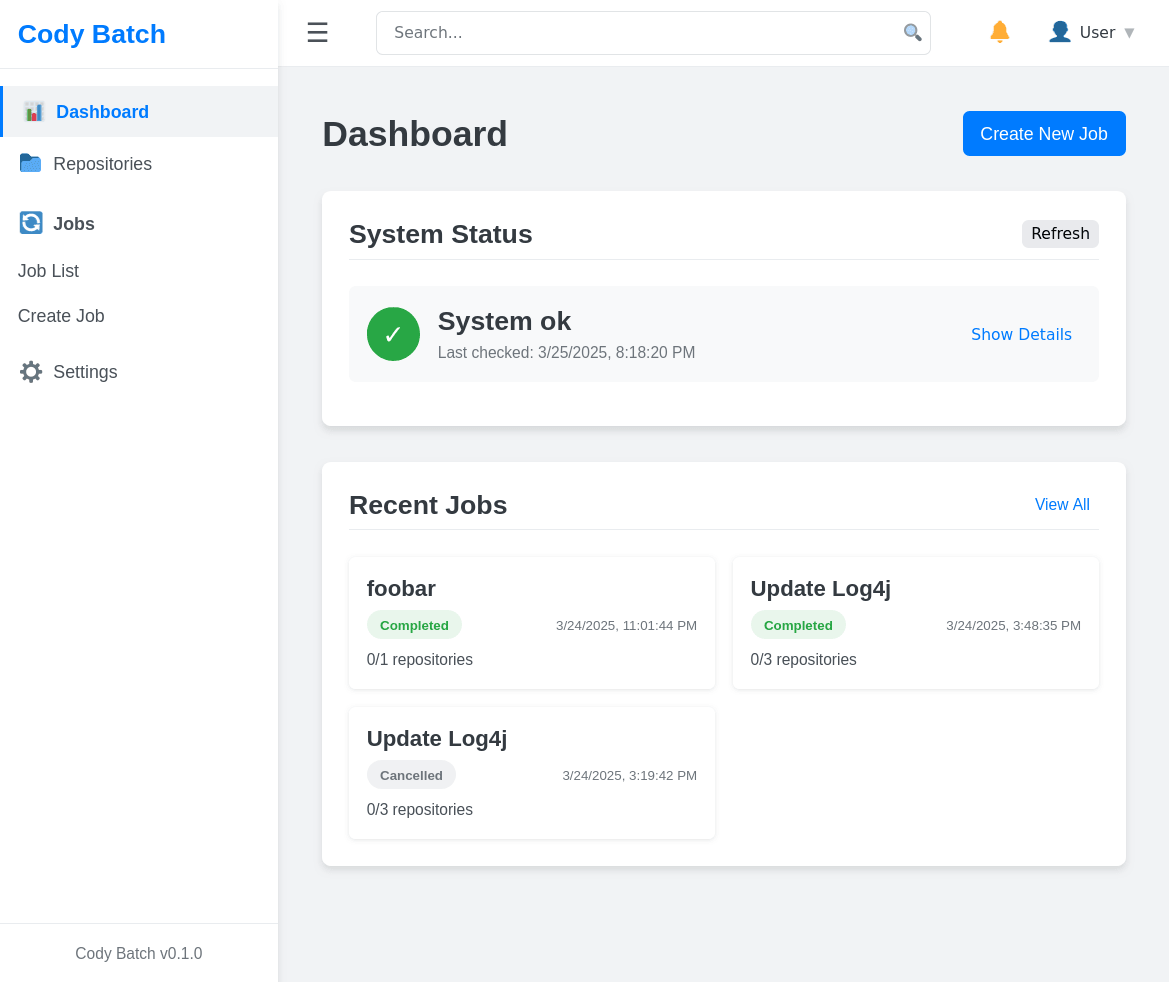

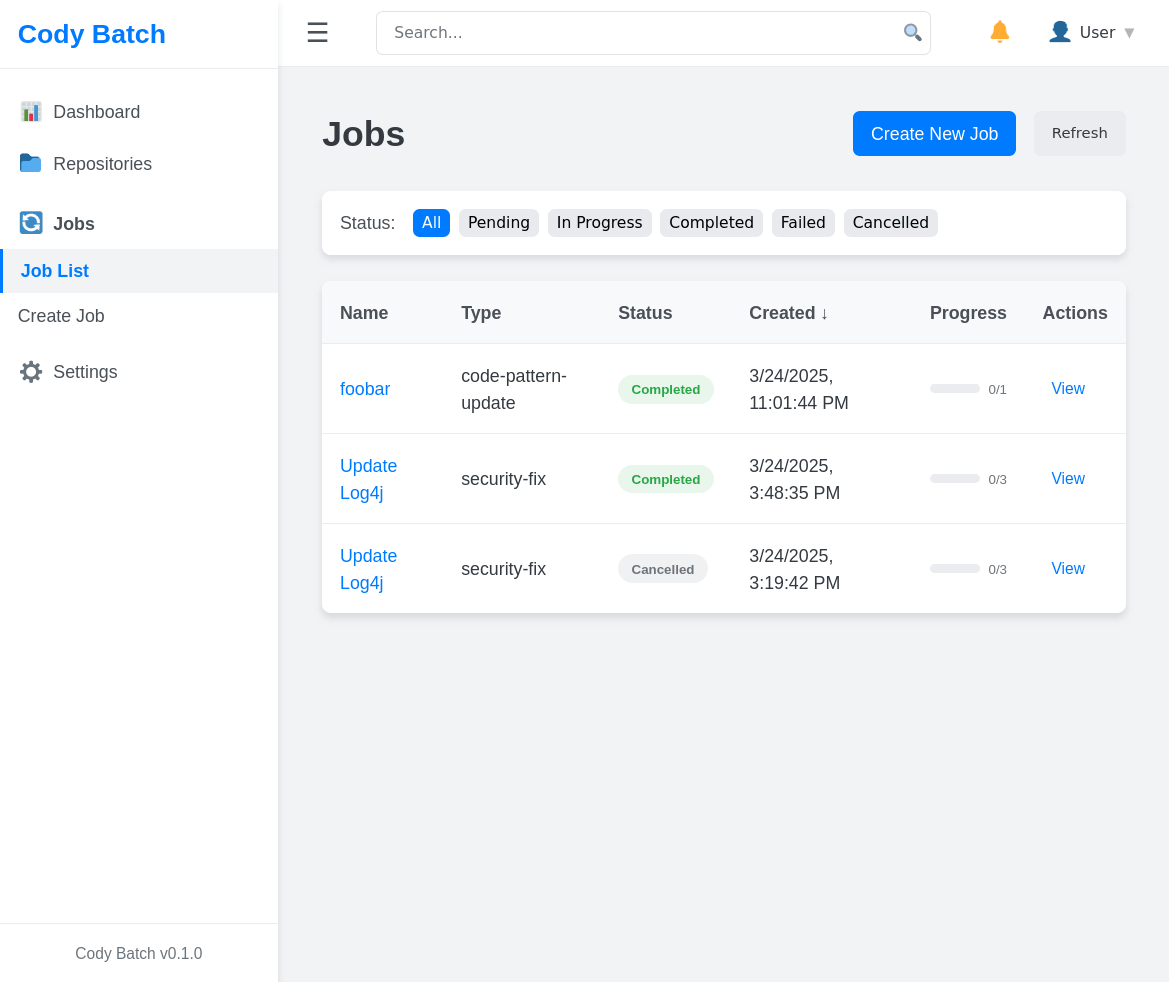

Cody Batch - Autonomous Repository Remediation

Sourcegraph

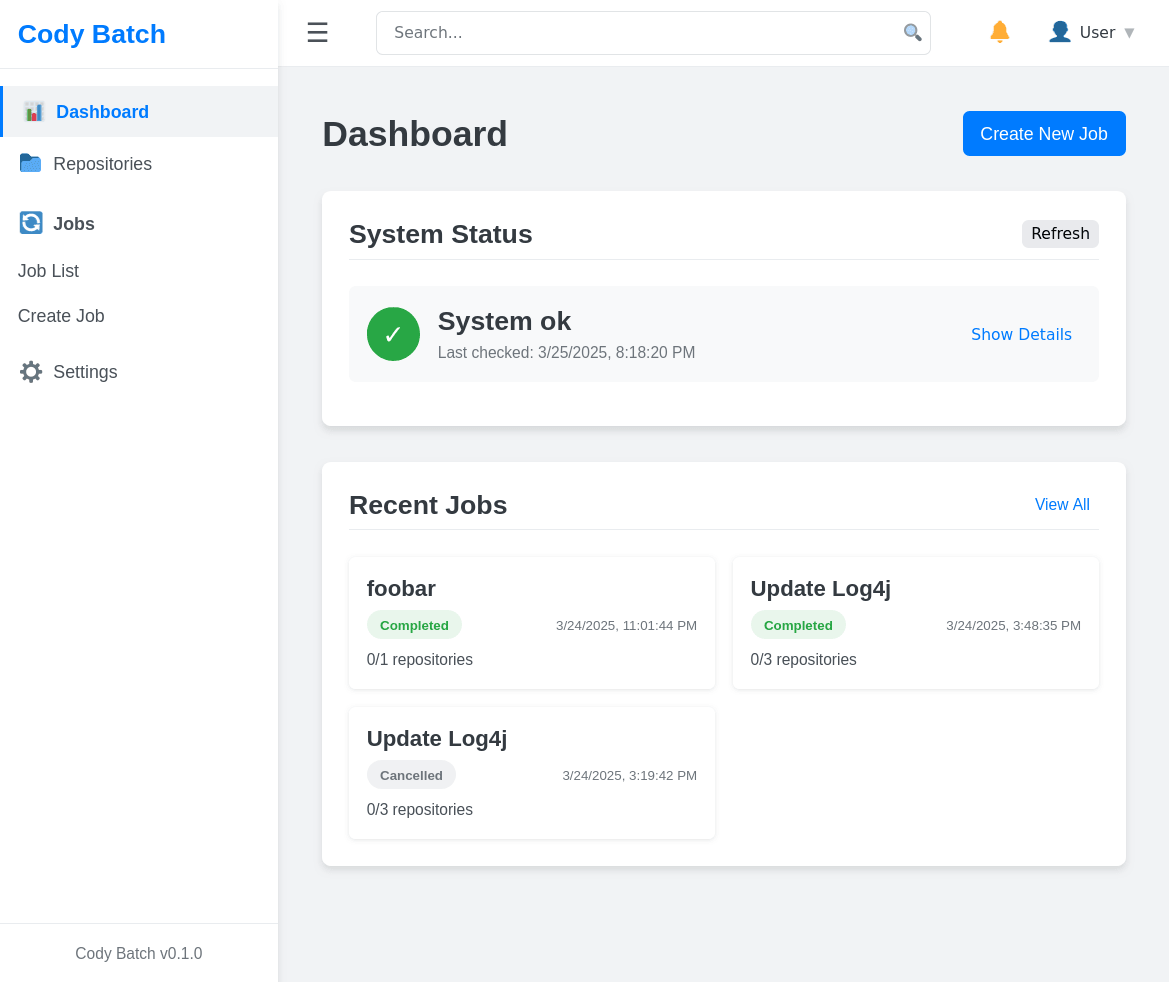

An AI-powered tool that uses Claude 3.7 to make bulk changes across GitHub repositories: it analyzes each repository's code and applies changes according to a user-supplied prompt.

Project Details

Cody Batch increases developer productivity by automating repetitive code maintenance tasks across multiple repositories, in line with Sourcegraph's vision for AI coding agents. It addresses three problems: code maintenance at scale, technical debt reduction, and consistency enforcement.

Business Value

- Developer Productivity

- Code Maintenance

Key Features

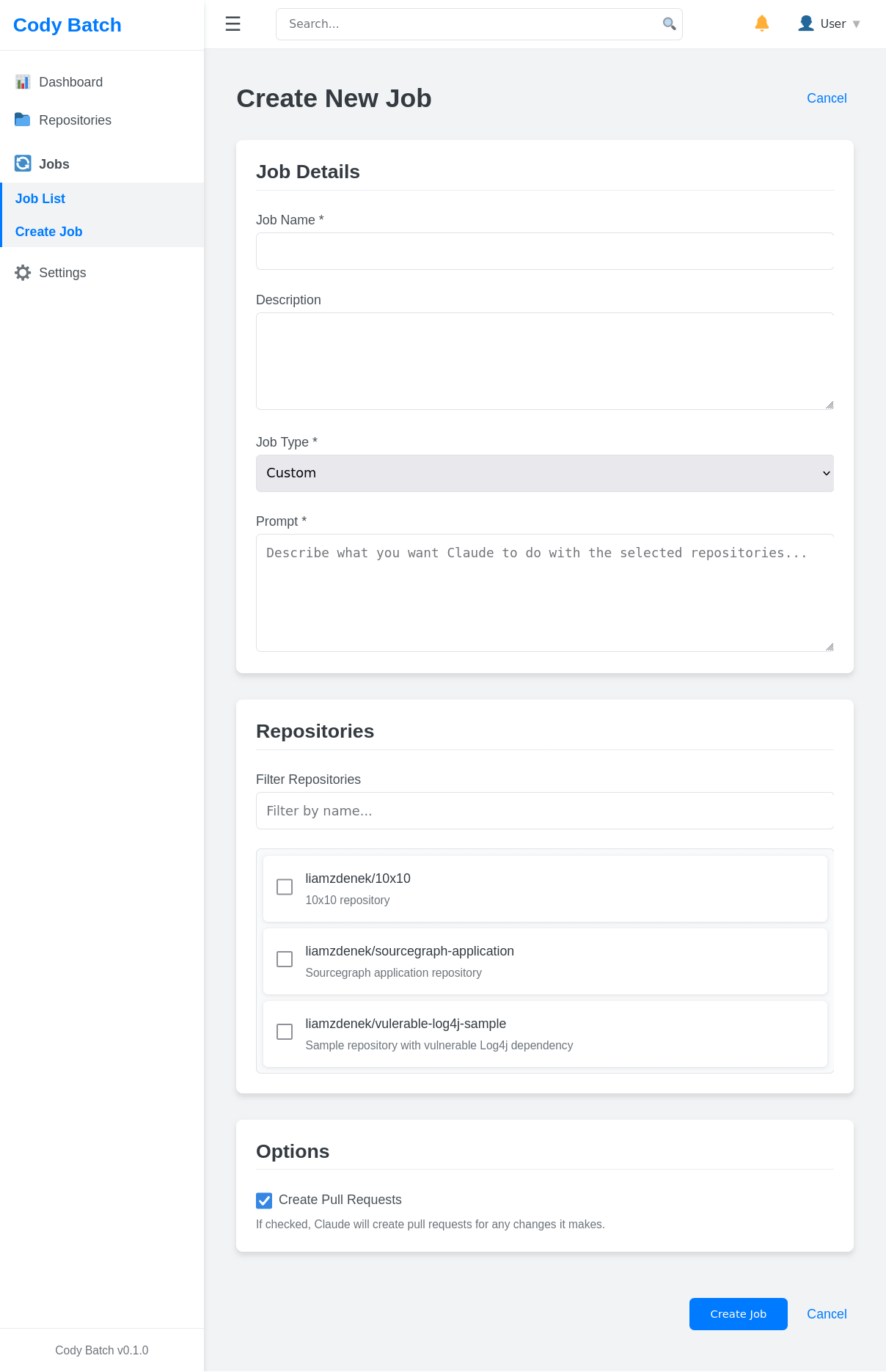

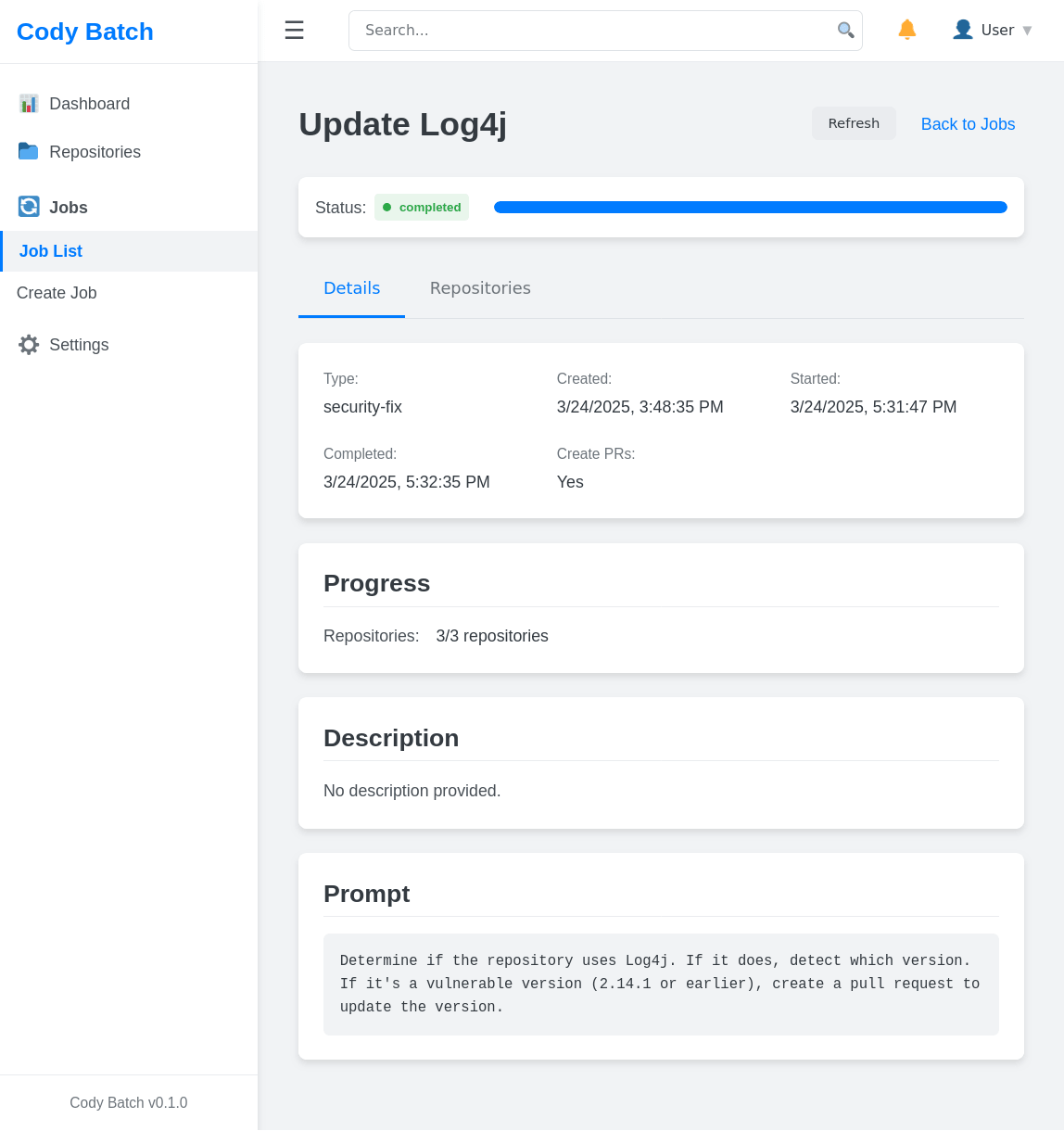

Job Creation Interface

User-friendly form for creating batch change jobs, with repository selection, custom prompt input, and an option to automatically create pull requests.

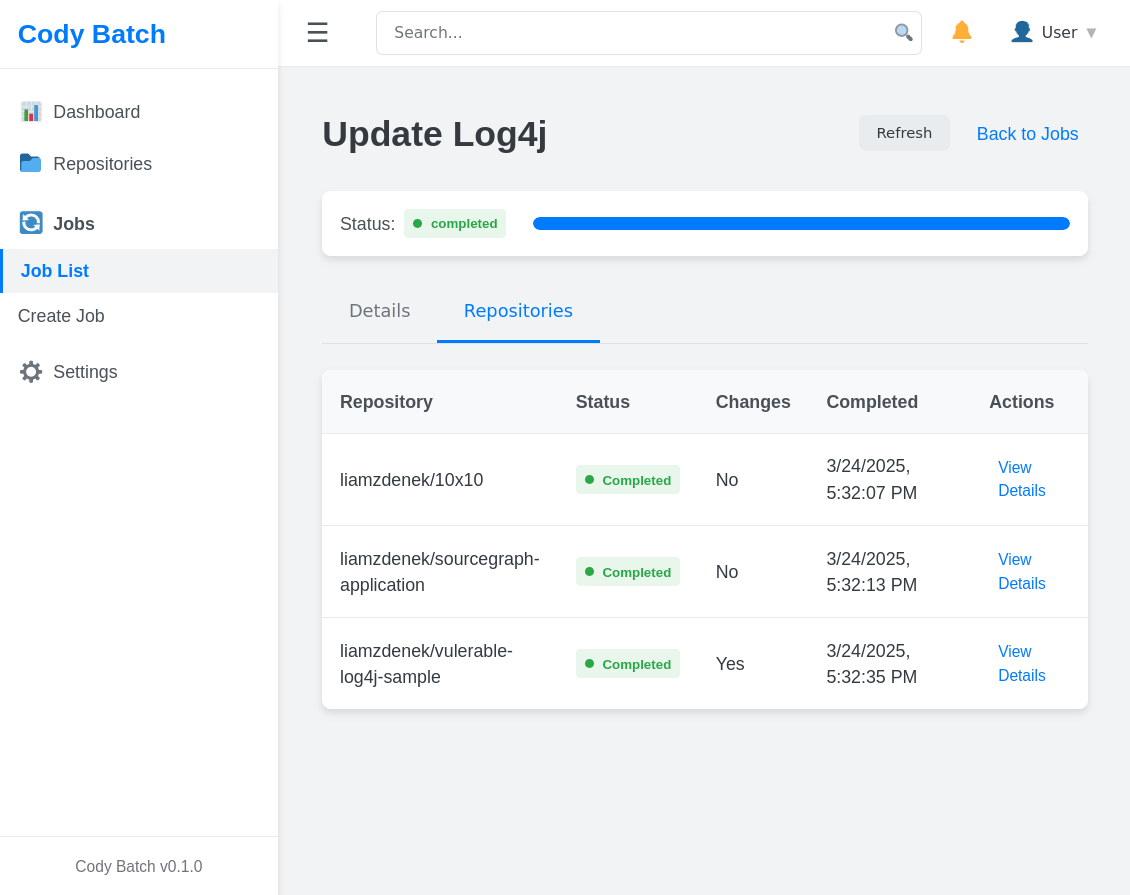

Batch Processing System

AWS Batch for long-running job processing with container-based job execution, parallel processing of multiple repositories, and comprehensive error handling.
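One way to picture the parallel processing and error handling described above is a small worker pool inside a single job container. This is a minimal sketch, not the project's implementation: `processRepos`, `RepoResult`, and the `processRepo` callback are hypothetical names, and the real system may instead fan work out as separate Batch jobs.

```typescript
// Result of processing one repository: either a value or a captured error.
type RepoResult<T> =
  | { repo: string; ok: true; value: T }
  | { repo: string; ok: false; error: string };

// Process repositories with at most `limit` running at once.
// `processRepo` is a hypothetical stand-in for the real per-repository work.
async function processRepos<T>(
  repos: string[],
  limit: number,
  processRepo: (repo: string) => Promise<T>,
): Promise<RepoResult<T>[]> {
  const results: RepoResult<T>[] = new Array(repos.length);
  let next = 0;

  // Each worker claims the next unprocessed index until none remain.
  async function worker(): Promise<void> {
    while (next < repos.length) {
      const i = next++;
      const repo = repos[i];
      try {
        results[i] = { repo, ok: true, value: await processRepo(repo) };
      } catch (err) {
        // A failure in one repository is recorded and does not abort the others.
        const message = err instanceof Error ? err.message : String(err);
        results[i] = { repo, ok: false, error: message };
      }
    }
  }

  const workerCount = Math.max(1, Math.min(limit, repos.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}
```

Results keep the input order, so a job summary can list each repository next to its outcome.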

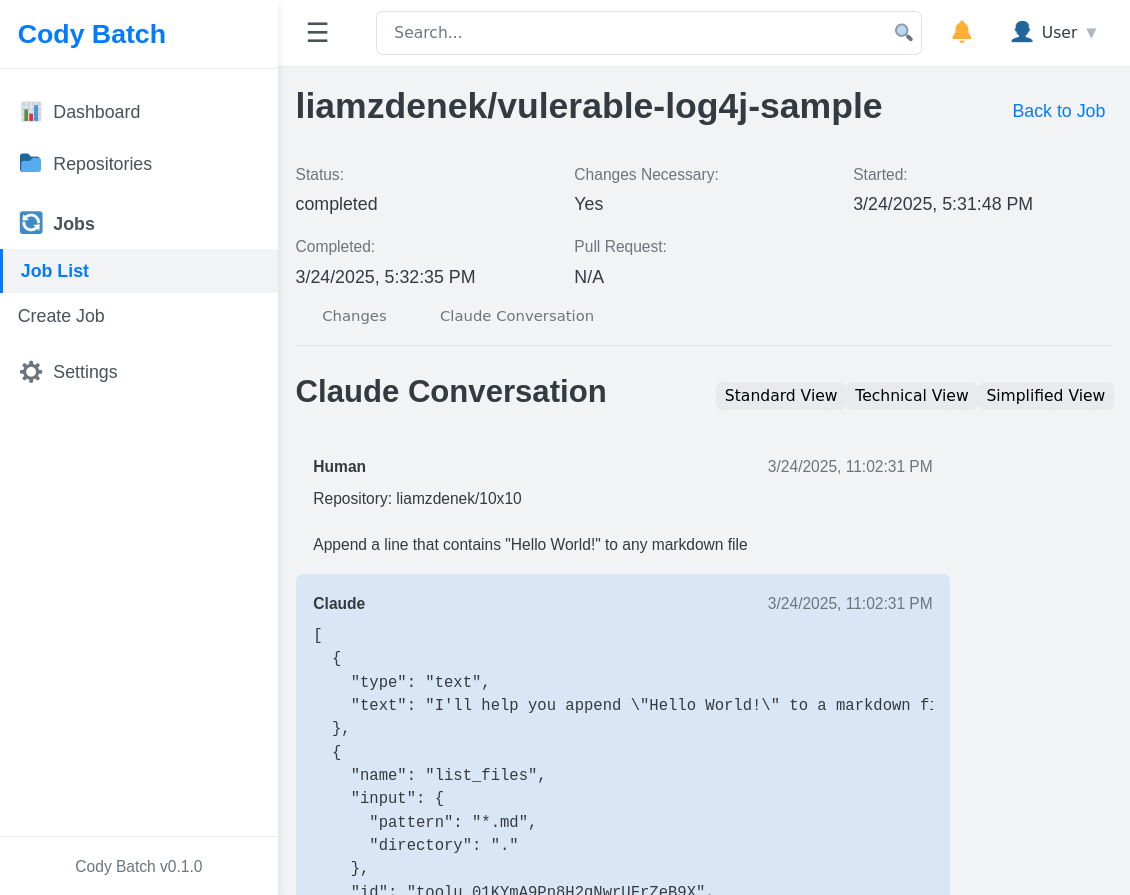

Claude 3.7 Integration

Tool-based interaction for repository analysis, autonomous code modification sessions, and token usage tracking and optimization.
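The tool-based interaction can be sketched as a tool definition plus a dispatcher that turns each tool call from the model into a result. The block shapes follow the Anthropic Messages API tool-use format (`input_schema`, `tool_use`, `tool_result`); the `read_file` tool, `ToolHandler`, and `runTool` are hypothetical and only illustrate the pattern.

```typescript
// Shapes below mirror the Anthropic Messages API tool-use format.
interface ToolDefinition {
  name: string;
  description: string;
  input_schema: {
    type: "object";
    properties: Record<string, unknown>;
    required?: string[];
  };
}

interface ToolUseBlock {
  type: "tool_use";
  id: string;
  name: string;
  input: Record<string, unknown>;
}

interface ToolResultBlock {
  type: "tool_result";
  tool_use_id: string;
  content: string;
  is_error?: boolean;
}

// Hypothetical tool: the name and schema are illustrative, not the project's.
const readFileTool: ToolDefinition = {
  name: "read_file",
  description: "Read a file from the cloned repository.",
  input_schema: {
    type: "object",
    properties: { path: { type: "string", description: "Repository-relative path" } },
    required: ["path"],
  },
};

type ToolHandler = (input: Record<string, unknown>) => string;

// Run one tool call and always return a tool_result, so a bad call is
// reported back to the model instead of crashing the session.
function runTool(block: ToolUseBlock, handlers: Map<string, ToolHandler>): ToolResultBlock {
  const handler = handlers.get(block.name);
  if (handler === undefined) {
    return { type: "tool_result", tool_use_id: block.id, content: `Unknown tool: ${block.name}`, is_error: true };
  }
  try {
    return { type: "tool_result", tool_use_id: block.id, content: handler(block.input) };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { type: "tool_result", tool_use_id: block.id, content: message, is_error: true };
  }
}
```

Restricting the model to a fixed handler map is what gives the session clear boundaries: anything outside the map comes back as an error result rather than an action.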

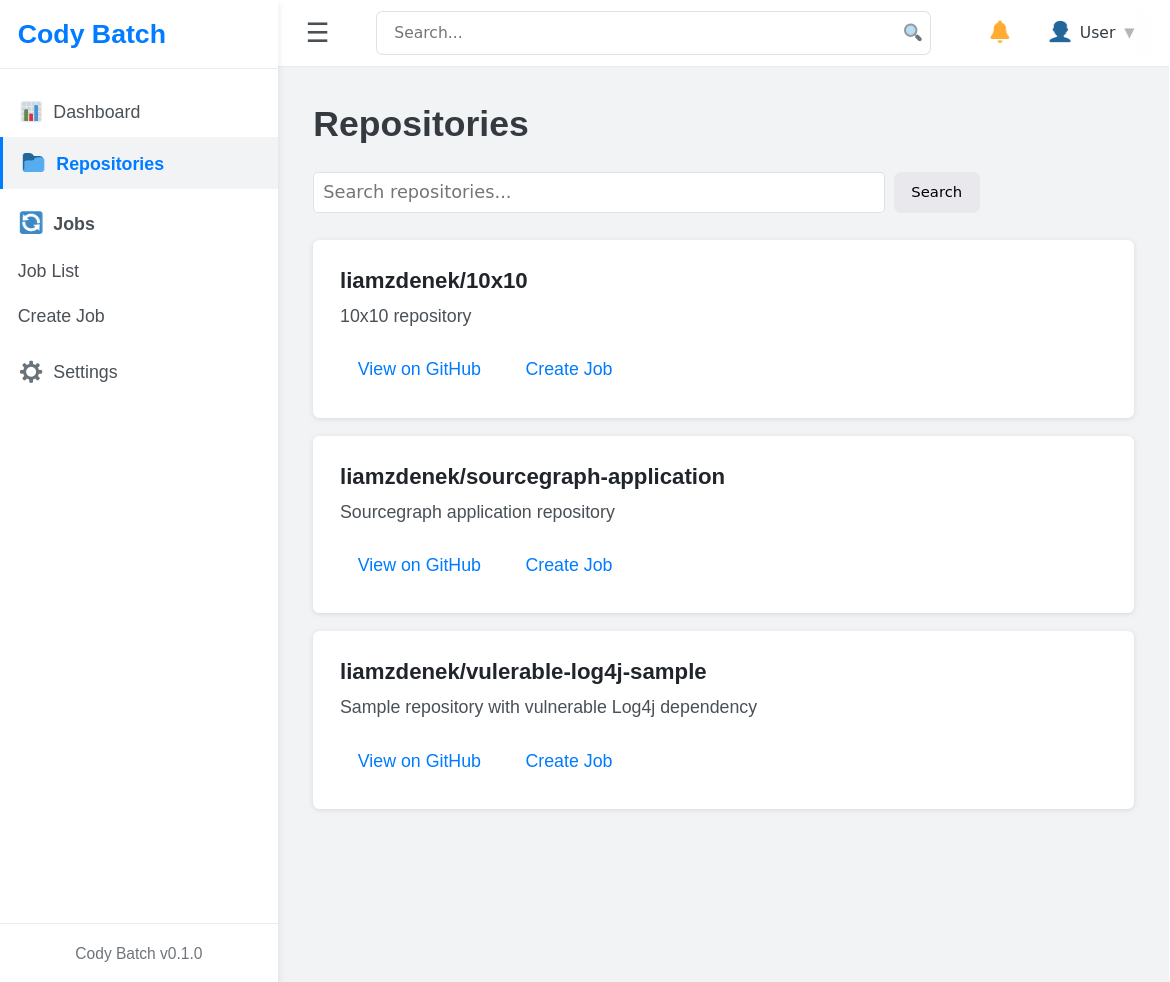

GitHub Integration

Repository scanning and cloning, code analysis and modification, pull request creation for owned repositories, and diff generation for non-owned repositories.
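The split between pull requests and diffs can be expressed as a small decision function. This sketch assumes that "owned" means the repository owner matches the authenticated user's login, and that pull requests are only opened when the job's option is enabled; `chooseDelivery` and the `cody-batch/` branch prefix are hypothetical.

```typescript
// How the changes for one repository are delivered.
type Delivery =
  | { kind: "pull_request"; branch: string }
  | { kind: "diff" };

// Assumption: ownership is decided by comparing the owner part of
// "owner/name" with the authenticated login (GitHub logins are case-insensitive).
function chooseDelivery(
  repoFullName: string,
  authenticatedLogin: string,
  createPullRequests: boolean,
  jobId: string,
): Delivery {
  const [owner = ""] = repoFullName.split("/");
  const owned = owner.toLowerCase() === authenticatedLogin.toLowerCase();
  if (owned && createPullRequests) {
    // Hypothetical branch naming scheme.
    return { kind: "pull_request", branch: `cody-batch/${jobId}` };
  }
  // Non-owned repositories (or jobs without the PR option) get a diff only.
  return { kind: "diff" };
}
```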

Technologies Used

Frontend

Backend

DevOps

Other

Challenges & Solutions

Long-running job processing exceeding Lambda's 15-minute limit

Implemented AWS Batch for job processing, allowing jobs to run for extended periods while maintaining a serverless architecture for the API.
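A sketch of how the API side might hand a job to AWS Batch: the function below only builds the request parameters. The field names (`jobName`, `jobQueue`, `jobDefinition`, `containerOverrides`, `timeout.attemptDurationSeconds`) are those of the Batch `SubmitJob` operation; the queue name, job definition name, environment variable names, and the two-hour default are assumptions for illustration.

```typescript
// Subset of the AWS Batch SubmitJob request used in this sketch.
interface SubmitJobParams {
  jobName: string;
  jobQueue: string;
  jobDefinition: string;
  containerOverrides: { environment: { name: string; value: string }[] };
  timeout: { attemptDurationSeconds: number };
}

function buildSubmitJobParams(
  jobId: string,
  createPullRequests: boolean,
  maxRuntimeSeconds: number = 2 * 60 * 60, // assumed limit, well beyond Lambda's 15 minutes
): SubmitJobParams {
  // Batch job names allow letters, digits, hyphens, and underscores.
  const safeId = jobId.replace(/[^A-Za-z0-9_-]/g, "-").slice(0, 100);
  return {
    jobName: `cody-batch-${safeId}`,
    jobQueue: "cody-batch-queue", // hypothetical queue name
    jobDefinition: "cody-batch-worker", // hypothetical job definition name
    containerOverrides: {
      // Pass only small identifiers; the container is assumed to load the
      // repository list and prompt from storage using JOB_ID.
      environment: [
        { name: "JOB_ID", value: jobId },
        { name: "CREATE_PULL_REQUESTS", value: String(createPullRequests) },
      ],
    },
    timeout: { attemptDurationSeconds: maxRuntimeSeconds },
  };
}
```

With the AWS SDK for JavaScript v3, an object of this shape can be passed to `SubmitJobCommand` from `@aws-sdk/client-batch`, so the API handler returns immediately while the container runs for as long as the job needs.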

Claude API integration with proper error handling and token management

Developed a comprehensive Claude client with direct API calls, detailed logging, and token usage tracking, ensuring robust interaction with the Claude 3.7 API.
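A direct call to the Claude Messages API with token tracking might look like the sketch below. The endpoint and headers (`x-api-key`, `anthropic-version`) are the documented ones; `TokenTracker`, `buildClaudeRequest`, `callClaude`, and the injected `fetchFn` parameter are hypothetical names, and the model ID is left as a parameter rather than assumed.

```typescript
interface Usage {
  input_tokens: number;
  output_tokens: number;
}

// Minimal structural types so the sketch does not depend on DOM typings.
interface RequestInitLike {
  method: string;
  headers: Record<string, string>;
  body: string;
}
interface MinimalResponse {
  ok: boolean;
  status: number;
  text(): Promise<string>;
}
type FetchLike = (url: string, init: RequestInitLike) => Promise<MinimalResponse>;

// Accumulates token usage across all calls in a job.
class TokenTracker {
  inputTokens = 0;
  outputTokens = 0;
  add(usage: Usage): void {
    this.inputTokens += usage.input_tokens;
    this.outputTokens += usage.output_tokens;
  }
}

function buildClaudeRequest(
  apiKey: string,
  model: string,
  prompt: string,
  maxTokens: number,
): { url: string; init: RequestInitLike } {
  return {
    url: "https://api.anthropic.com/v1/messages",
    init: {
      method: "POST",
      headers: {
        "content-type": "application/json",
        "x-api-key": apiKey,
        "anthropic-version": "2023-06-01",
      },
      body: JSON.stringify({
        model,
        max_tokens: maxTokens,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

async function callClaude(
  fetchFn: FetchLike,
  apiKey: string,
  model: string,
  prompt: string,
  tracker: TokenTracker,
  maxTokens: number = 1024,
): Promise<unknown> {
  const { url, init } = buildClaudeRequest(apiKey, model, prompt, maxTokens);
  const res = await fetchFn(url, init);
  const raw = await res.text();
  if (!res.ok) {
    // Keep the raw body in the error: it carries the API's error type and message.
    throw new Error(`Claude API error ${res.status}: ${raw}`);
  }
  const data = JSON.parse(raw) as { usage?: Usage };
  if (data.usage) tracker.add(data.usage);
  return data;
}
```

In Node 18 or later, the runtime's global `fetch` can be passed as `fetchFn`; injecting it also makes the client easy to test with a fake.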

GitHub API rate limiting for repository operations

Implemented rate limit checking and management in the GitHub client, with exponential backoff and retry logic to handle rate limit constraints.
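The retry logic can be sketched as follows. GitHub reports rate limits through the `retry-after`, `x-ratelimit-remaining`, and `x-ratelimit-reset` response headers; the function names, the lowercase header map, and the default base delay, cap, and attempt count are assumptions for illustration.

```typescript
// Response headers keyed by lowercase name (an assumption of this sketch).
type HeaderMap = Record<string, string | undefined>;

interface RateLimitedResponse {
  status: number;
  headers: HeaderMap;
}

// A 403 is treated as a rate limit only when the headers say so; other 403s
// (for example missing permissions) should not be retried.
function isRateLimited(res: RateLimitedResponse): boolean {
  if (res.status === 429) return true;
  if (res.status !== 403) return false;
  return res.headers["retry-after"] !== undefined || res.headers["x-ratelimit-remaining"] === "0";
}

// Prefer the server's hints; fall back to capped exponential backoff.
function retryDelayMs(
  headers: HeaderMap,
  attempt: number,
  nowMs: number,
  baseMs: number = 1000,
  maxMs: number = 60000,
): number {
  const retryAfter = Number(headers["retry-after"]);
  if (headers["retry-after"] !== undefined && Number.isFinite(retryAfter)) {
    return retryAfter * 1000; // retry-after is given in seconds
  }
  const reset = Number(headers["x-ratelimit-reset"]);
  if (headers["x-ratelimit-remaining"] === "0" && Number.isFinite(reset)) {
    return Math.max(0, reset * 1000 - nowMs); // reset is a UTC epoch time in seconds
  }
  return Math.min(maxMs, baseMs * Math.pow(2, attempt));
}

// Retries while the response is rate limited, up to `maxAttempts` requests.
// The last response is returned even if it is still rate limited.
async function withRetry<T extends RateLimitedResponse>(
  request: () => Promise<T>,
  sleep: (ms: number) => Promise<void>,
  maxAttempts: number = 5,
  now: () => number = Date.now,
): Promise<T> {
  let res = await request();
  for (let attempt = 0; attempt < maxAttempts - 1 && isRateLimited(res); attempt++) {
    await sleep(retryDelayMs(res.headers, attempt, now()));
    res = await request();
  }
  return res;
}
```

The `sleep` function is injected so the caller decides how waiting happens, for example with a timer-based promise in production and a no-op in tests.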

Key Learnings

- Tool-Based AI Interaction: The tool-based approach for AI interaction proved highly effective for repository operations, providing structured interaction with clear boundaries and better control over the AI's actions.

- AWS Batch for Long-Running Jobs: Using AWS Batch for job processing allowed for handling complex, long-running tasks that would exceed Lambda's timeout limits, while maintaining a serverless architecture for the API.

- Conversation History Storage: Storing complete conversation histories, including tool calls and results, provided valuable context for understanding the AI's reasoning and debugging issues.

- Direct API Calls vs. SDK: Using direct API calls with fetch instead of relying solely on the SDK provided better error handling, debugging, and compatibility with newer Claude models.
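To illustrate the conversation history learning, here is one possible record shape and a helper that pairs each tool call with its result for debugging. The content block shapes follow the Claude Messages API; `ConversationTurn`, `ToolCallTrace`, and `traceToolCalls` are hypothetical names, and how the project actually persists its histories is not stated in the source.

```typescript
type ContentBlock =
  | { type: "text"; text: string }
  | { type: "tool_use"; id: string; name: string; input: Record<string, unknown> }
  | { type: "tool_result"; tool_use_id: string; content: string; is_error?: boolean };

// One stored turn; a whole session is an array of these, serializable as JSON.
interface ConversationTurn {
  role: "user" | "assistant";
  content: ContentBlock[];
  timestamp: string; // ISO 8601
}

interface ToolCallTrace {
  name: string;
  input: Record<string, unknown>;
  result?: string;
  failed: boolean;
}

// Pair every tool call with its result, in the order the calls were made.
function traceToolCalls(turns: ConversationTurn[]): ToolCallTrace[] {
  const traces = new Map<string, ToolCallTrace>();
  for (const turn of turns) {
    for (const block of turn.content) {
      if (block.type === "tool_use") {
        traces.set(block.id, { name: block.name, input: block.input, failed: false });
      } else if (block.type === "tool_result") {
        const trace = traces.get(block.tool_use_id);
        if (trace !== undefined) {
          trace.result = block.content;
          trace.failed = block.is_error === true;
        }
      }
    }
  }
  return Array.from(traces.values());
}
```

A call that never received a result shows up with `result` unset, which is often the first thing to look for when a session stopped unexpectedly.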